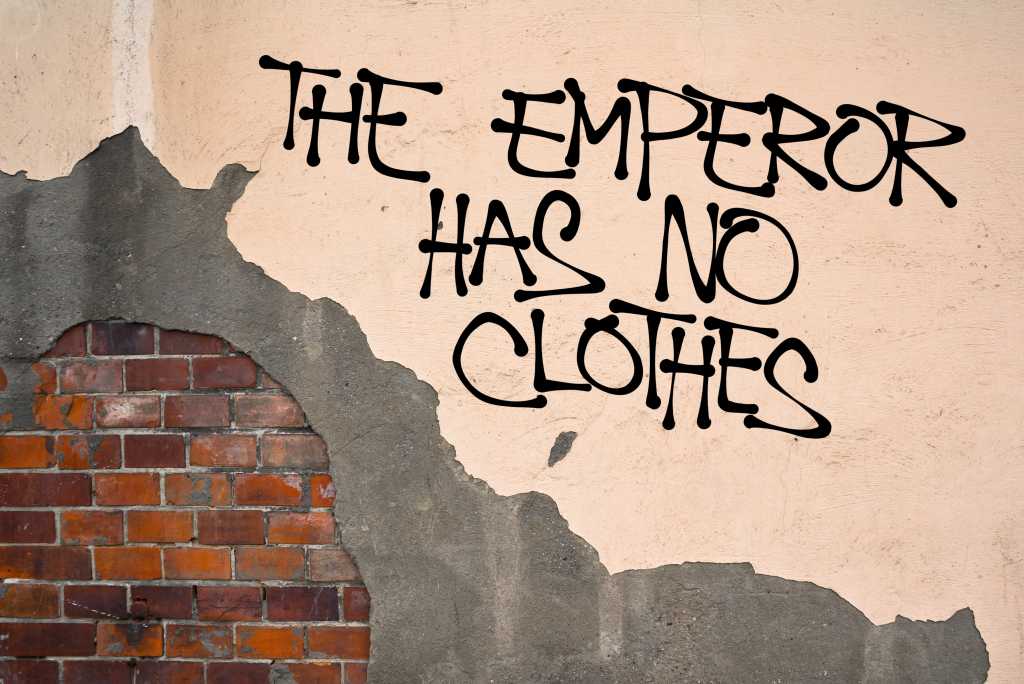

“Today’s models are really just predicting the next word in a text, he says. But they’re so good at this that they fool us. And because of their enormous memory capacity, they can seem to be reasoning, when in fact they’re merely regurgitating information they’ve already been trained on.

“‘We are used to the idea that people or entities that can express themselves, or manipulate language, are smart — but that’s not true,’ says LeCun. ‘You can manipulate language and not be smart, and that’s basically what LLMs are demonstrating.’”

That is the key issue. Enterprises are putting far too much faith in genAI systems, says Francesco Perticarari, general partner at technology investment house Silicon Roundabout Ventures in London, England.

It’s easy to assume that the rare correct answers these tools give are flashes of brilliance rather than lucky guesses. But “the output is not based at all on reasoning. It is merely based on extremely powerful computing,” Perticarari says.

Putting genAI in the driver’s seat

One frequently cited selling point for genAI is that some models have proven quite effective at passing various state bar exams. But bar exams are ideal environments for genAI, because the answers are all published. Memorization and regurgitation are exactly what genAI does well, but that doesn’t mean genAI tools have the skills, understanding, and intuition to practice law.