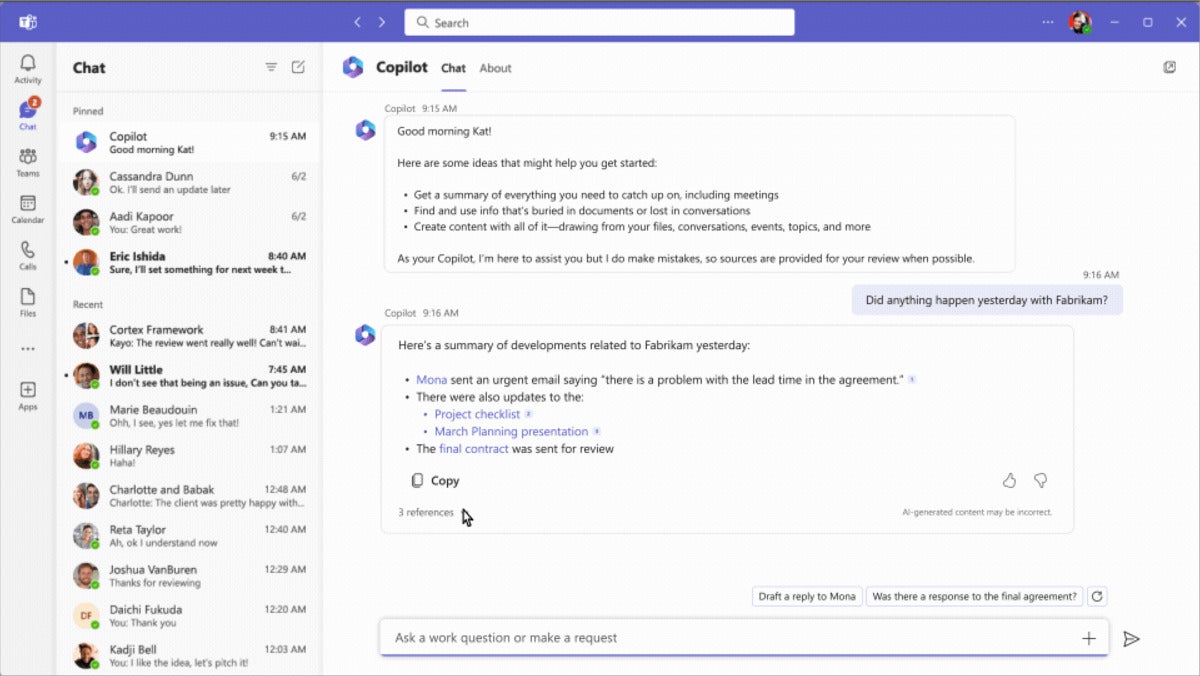

The other way to interact with Copilot is via Microsoft 365 Chat, which is accessible as a chatbot within Teams. Here, Microsoft 365 Chat works as a search tool that surfaces information from a range of sources, including documents, calendars, emails, and chats. For instance, an employee could ask for an update on a project, and get a summary of relevant team communications and documents already created, with links to sources.

Microsoft will extend Copilot’s reach into other apps workers use via “plugins” — essentially third-party app integrations. These will allow the assistant to tap into data held in apps from other software vendors including Atlassian, ServiceNow, and Mural. Fifty such plugins are available, with “thousands” more expected eventually, Microsoft said.

How are early customers using Copilot?

Prior to launch, many businesses accessed the Copilot for M365 as part of a paid early access program (EAP); it began with a small number of participants before growing to several hundred customers, including Chevron, Goodyear, and General Motors.

One of those involved in the EAP was marketing firm Dentsu, which began by deploying 300 licenses to tech staff and then to employees across its business lines globally. The most popular use case so far is summarization of information generated in M365 apps, a Teams call being one example.

“Summarization is definitely the most common use case we see right out of the box, because it’s an easy prompt: you don’t really have to do any prompt engineering…, it’s suggested by Copilot,” Kate Slade, director of emerging technology enablement at Dentsu, said.

Staffers also use M365 Chat to prepare for meetings, for instance, by quickly pulling information from different sources. This could mean finding information from a project several years ago “without having to hunt through a folder maze,” said Slade.

The feedback from workers at Dentsu has been overwhelmingly positive, said Slade, with a waiting list now in place for those who want to use the AI tool.

“It’s reducing the time that they spend on [tasks] and giving them back time to be more creative, more strategic, or just be a human and connect peer to peer in Teams meetings,” she said. “That’s been one of the biggest impacts that we’ve seen…, just helping make time for the higher-level cognitive tasks that people have to do.”

Use cases have varied between different roles. Dentsu’s graphic designers would get less value from using Copilot in PowerPoint, for example: “They’re going to create really visually stunning pieces themselves and not really be satisfied with that out-of-the-box capability,” said Slade. “But those same creatives might get a lot of benefits from Copilot in Excel and being able to use natural language to say, ‘Hey, I need to do some analysis on this table,’ or ‘What are key trends from this data?’ or ‘I want to add a column that does this or that.’”

Most vendors in the productivity and collaboration software market have added genAI to their offerings at this point.

Google, Microsoft’s main competitor in the productivity software arena, launched Duet AI for Workspace in 2023, and later rebranded it to Gemini Enterprise ($30 per user each month) and Gemini Business ($20 per user each month). Google’s AI assistant can summarize Gmail conversations, draft texts, and generate images in Workspace apps such as Docs, Sheets, and Slides.

Slack, the collaboration software firm owned by Salesforce and a rival to Microsoft Teams, launched its Slack AI feature in February. Other firms that compete with elements of the Microsoft 365 portfolio, such as Zoom, Box, Coda, and Cisco, have also touted genAI plans.

Meanwhile, Apple announced that it will build generative AI features into its range of productivity tools.

Then there are the AI-specific tools, such as OpenAI’s ChatGPT, as well as Claude, Perplexity AI, Jasper AI, and others, that also provide text generation and document summarization features.

Copilot has some advantages over rivals. One is Microsoft’s dominant position in the productivity and collaboration software market, said Castañón. “The key advantage the Microsoft 365 Copilot will have is that — like other previous initiatives such as Teams — it has a ‘ready-made’ opportunity with Microsoft’s collaboration and productivity portfolio and its extensive global footprint,” he said.

Microsoft’s close partnership with OpenAI (Microsoft has invested billions of dollars in the company on several occasions since 2019 and has a large non-controlling share of the business) likely helped it build generative AI across its applications at a faster rate than rivals.

“Its investment in OpenAI has already had an impact, allowing it to accelerate the use of generative AI/LLMs in its products, jumping ahead of Google Cloud and other competitors,” said Castañón.

What are the genAI risks for businesses? ‘Hallucinations’ and data protection

Along with the potential benefits of genAI tools like the Copilot for M365, businesses should consider risks. These include the hallucinations large language models (LLMs) are prone to, where incorrect information is provided to employees.

“Copilot is generative AI — it definitely can hallucinate,” said Slade, citing the example of one employee who asked the Copilot to provide a summary of pro bono work completed that month to add to their timecard and send to their manager. A detailed two-page summary document was created without issue; however, the address of all meetings was given as “123 Main Street, City, USA” — an error that’s easily noticed, but an indication of the care required by users when relying on Copilot.

The occurrence of hallucinations can be reduced by improving prompts, but Dentsu staff have been advised to treat outputs from the genAI assistant with caution. “The more context you can give it generally, the closer you’re going to get to a final output,” said Slade. “But it’s never going to replace the need for human review and fact check.

“As much as you can, level-set expectations and communicate to your first users that this is still an evolving technology. It’s a first draft, it’s not a final draft — it’s going to hallucinate and mess up sometimes.”

Tools that filter Copilot outputs are emerging and could help here, said Litan, but this is likely to remain a key challenge for businesses for the foreseeable future.

Another risk relates to one of the major strengths of the Copilot: its ability to sift through files and data across a company’s M365 environment using natural language inputs.

While Copilot is only able to access files according to permissions granted to individual employees, the reality is that businesses often fail to adequately label sensitive documents. This means individual employees might suddenly realize they are able to ask Copilot to provide details on payroll or customer information if it hasn’t been locked down with the right permissions.

A 2022 report by data security firm Varonis claimed that one in 10 files hosted in SaaS environments is accessible by all staff; an earlier 2019 report put that figure — including cloud and on-prem files and folders — at 22%. In many cases, this can mean organization-wide permissions are granted to thousands of sensitive files, Varonis said.

In many cases, the most important data, around payroll, for instance, will have strict permissions in place. A greater challenge lies in securing unstructured data, with sensitive information finding its way into a wide range of documents created by individual employees — a store manager planning payroll in an Excel spreadsheet before updating a central system, for example. This is similar to a situation that the CTO of an unnamed US restaurant chain encountered during the EAP, said Litan.

“There’s a lot of personal data that’s kept on spreadsheets belonging to individual managers,” said Litan. “There’s also a lot of intellectual property that’s kept on Word documents in SharePoint or Teams or OneDrive.”

“You don’t realize how much you have access to in the average company,” said Matt Radolec, vice president for incident response and cloud operations at Varonis. “An assumption you could have is that people generally lock this stuff down: they do not. Things are generally open.”

Another consideration is that employees often end up storing files relating to their personal lives on work laptops.

“Employees use their desktops for personal work, too — most of them don’t have separate laptops,” said Litan. “So you’re going to have to give employees time to get rid of all their personal data. And sometimes you can’t, they can’t just take it off the system that easily because they’re locked down — you can’t put USB drives in [to corporate devices, in some cases].

“So it’s just a lot of processes companies have to go through. I’m on calls with clients every day on the risk. This one really hits them.”

Getting data governance in order could take businesses more than a year, said Litan. “There are no shortcuts. You’ve got to go through the entire organization and set up the permissions properly,” she said.

In Radolec’s view, very few M365 customers have yet adequately addressed the risks around data access within their organization. “I think a lot of them are just planning to do the blocking and tackling after they get started,” he said. “We’ll see to what degree of effectiveness that is [after launch]. We’re right around the corner from seeing how well people will fare with it.”

The Copilot for M365 pros and cons

Pros:

- Boost to productivity. GenAI features can save time for users by automating certain tasks.

- Breadth of features. Copilot for M365 is built into the productivity apps that many workers use on a daily basis, including Word, Excel, Outlook, and Teams.

- Responses generated by the Copilot for M365 are anchored in the emails, files, calendars, meetings, contacts, and other information contained in Microsoft 365. This means the Copilot for M365 can arguably offer greater insights into work data than any other generative AI tool.

- Enterprise-grade privacy and security controls. Unlike consumer AI assistants, Microsoft promises that customer data won’t be used to train Copilot models. It also offers tools to help manage access to data in M365 apps.

Cons:

- Price. GenAI isn’t cheap and M365 customers are required to pay a significant additional fee each month for access to Copilot features. An individual employee might not need access to Copilot in more than a couple of M365 apps.

- Need for employee training. Getting the most out of genAI tools will require guidance around effective prompts, particularly for employees who are unfamiliar with the technology, an additional cost businesses must factor in.

- Accuracy and hallucinations. LLMs are notoriously unreliable, confidently offering answers that are incorrect. This is a particular concern when it comes to business data, and users must be on the lookout for errors in Copilot outputs.

- Data protection risks. The ability for Copilot for M365 to access a wide range of corporate data means businesses must be careful to ensure that sensitive documents are not exposed.

- The Copilot functionality in Excel is limited at this stage.